Collaborative AI helps combat bias by providing equal access and visibility into models.

Illustration by Pablo Stanley

Words by Jack Riewe

No one wants bias in their organization. Underrepresentation has plagued the business world for years, and many fear bias is making its way into the artificial intelligence industry. Although AI and machine learning are highly technical, scientific subjects, they are still subject to human error. Data collection, while immensely important, is an evolving practice, and there is still plenty of room for bias in the data prediction process.

Areas in Machine Learning Vulnerable to Bias

Training Prediction Models

Both the input and the output of a model need governance. When building a model, you should be able to explain clearly what the model does and what outcome you expect from it. It’s important to remember that the developers of a prediction model bring their own backgrounds and blind spots to the algorithms they write.

Author Tom Taulli wrote for Forbes, “Additionally, studies have shown that algorithms trained on historically biased data have significant error rates for communities of color especially in over-predicting the likelihood of a convicted criminal to re-offend which can have serious implications for the justice system.” This can translate to biased algorithms making the wrong decisions for your business.
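One way to look for the kind of uneven error rates described above is to compare false positive rates across groups. The following is a minimal sketch in Python, not a full fairness audit. The function name, the record layout, and the toy data are all assumptions made up for illustration; they do not come from the studies cited.

```python
from collections import defaultdict

def false_positive_rate_by_group(records):
    """Return the false positive rate for each group.

    `records` is an iterable of (group, actual, predicted) tuples,
    where `actual` and `predicted` are booleans. This layout is a
    hypothetical one chosen for the example.
    """
    false_positives = defaultdict(int)
    negatives = defaultdict(int)
    for group, actual, predicted in records:
        if not actual:                 # only true negatives can be falsely flagged
            negatives[group] += 1
            if predicted:
                false_positives[group] += 1
    return {g: false_positives[g] / n for g, n in negatives.items() if n > 0}

# Toy data, invented for illustration only.
records = [
    ("A", False, True),
    ("A", False, False),
    ("A", True, True),
    ("B", False, False),
    ("B", False, False),
    ("B", True, True),
]

rates = false_positive_rate_by_group(records)
print(rates)  # {'A': 0.5, 'B': 0.0}
```

A large gap between groups, like the one in this toy data, is a signal to go back and examine the training data before trusting the model’s decisions.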

Lack of Representation in Datasets

Representation matters not only among your data subjects but also among the people analyzing and managing your datasets. According to a recent research report from NYU, “women comprise only 10% of AI research staff at Google and only 2.5% of Google’s workforce is black. This lack of representation is what leads to biased datasets and ultimately algorithms that are much more likely to perpetuate systemic biases.”

In this video, “coding poet” Joy Buolamwini describes how she was excluded from facial recognition technology because not enough diversity was included in the dataset.

Designing an Unbiased Data Prediction

Define the Business Problem and the Dataset

Stating the business problem and the desired outcome is the first step to designing an unbiased data prediction. This will guide your data collection process and determine which attributes you need when making a prediction.

Let’s say the prediction you want to make is “What is the persona of a person most likely to commit fraud?”

Building personas of customers most likely to do something requires collecting demographic data. If you collected data only from men, the persona most likely to commit fraud would inevitably be a man. It’s important to take a prediction like this and ask your team, “What data do we have to collect to make this prediction 100% accurate?”

If the prediction isn’t as close to 100% accurate as possible, it might lead you off course: your messaging might include biased language, your sales techniques might miss the mark, or the way you optimize your business might cost you revenue.

As part of defining the business problem, you also need to state the desired outcome of data collection. For example: “We want to collect data from as many users as we can to find the relationship between their attributes and their chances of committing fraud.” A statement like this can serve as your north star for collecting data.

Before deciding what data to collect, plan how you will avoid sample bias and non-response bias.

Sample Bias - Only reaching out to a portion of your audience.

Non-Response Bias - Only a small part of your audience responds to your survey, forum, etc.

Audience segmenting can be advantageous for messaging and offerings, but collecting data from a small segment of your audience to make general predictions about your whole user base can lead to skewed predictions.
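Both kinds of bias can be checked with simple arithmetic before any modeling starts. The sketch below, in Python, compares each segment’s share of the respondents with its share of the full audience and computes an overall response rate. The function names, the segment labels, and the numbers are hypothetical and exist only for this example.

```python
from collections import Counter

def group_shares(values):
    """Return each group's share of the total, as a fraction between 0 and 1."""
    counts = Counter(values)
    total = sum(counts.values())
    return {group: count / total for group, count in counts.items()}

def representation_gaps(audience, respondents):
    """Respondent share minus audience share, per group.

    A positive gap means the group is over-represented among
    respondents; a negative gap means it is under-represented.
    """
    audience_shares = group_shares(audience)
    respondent_shares = group_shares(respondents)
    return {
        group: respondent_shares.get(group, 0.0) - share
        for group, share in audience_shares.items()
    }

def response_rate(invited, responded):
    """Fraction of invited users who actually responded."""
    return responded / invited if invited else 0.0

# Invented example: an audience split evenly between two segments,
# but most of the responses come from only one of them.
audience = ["new"] * 50 + ["returning"] * 50
respondents = ["new"] * 8 + ["returning"] * 2

gaps = representation_gaps(audience, respondents)
rate = response_rate(invited=len(audience), responded=len(respondents))
print(gaps)
print(rate)
```

In this made-up case the low response rate points to possible non-response bias, and the gap between segments shows that the answers you did get lean heavily toward one part of the audience.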

Here’s a great post on the importance of having large datasets.

A Big Part of Biased Datasets Is Simply Not Having Enough Eyes on the Data

When you have a usable dataset, your team should be able to describe what the dataset contains and ask whether enough users with various attributes are represented.

Your team should also ask whether the data was collected fairly. Was the user pressured in any way to answer? Did the questions make sense? Was the user swayed to answer in a certain way?

After you answer those questions, look at the team overseeing the data. Does your team include people from enough different backgrounds to look at this dataset collectively and say it’s unbiased?

Data collection and prediction modeling should be a collaborative process.

How Collaborative Machine Learning Combats Bias

While researching ways to fight biased data predictions, I found that the biggest problems are data governance and a lack of diversity among those overseeing the data prediction process. One of the articles I came across described how great bosses avoid bias by implementing equal access. Collaborative ML provides equal access.

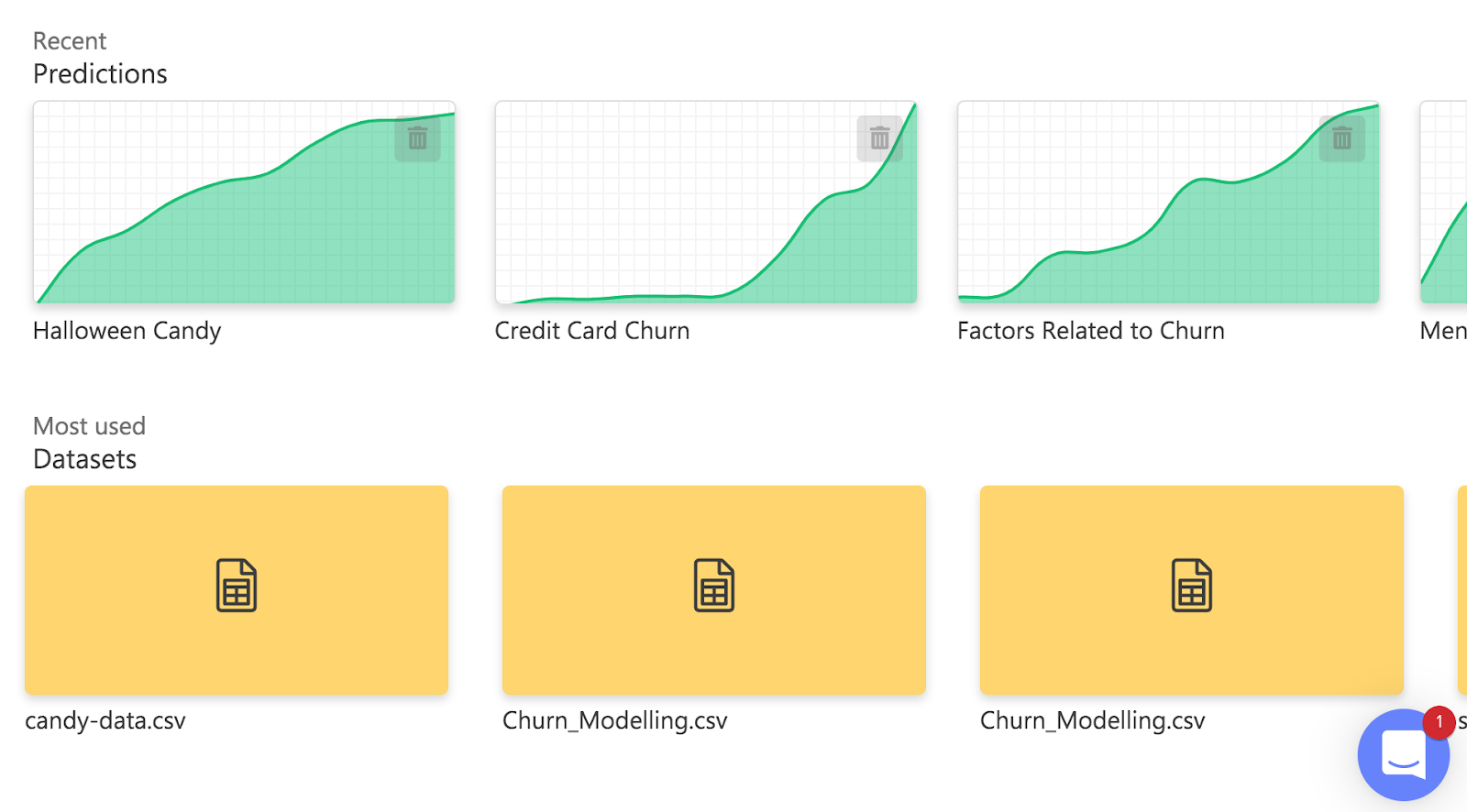

Collaborative machine learning gives every member of the team access to the models, so they can ask questions collectively and record queries and outcomes.

Collaborative machine learning also makes your data predictions more transparent, which makes bias easier to spot. This is a great way to strengthen governance in machine learning.